There is a now-famous quotation from the American Secretary of Defense, Donald Rumsfeld, in which he says (put slightly more clearly):

There are known knowns. These are things we know that we know.

There are also known unknowns; that is to say, there are things that we now know we don't know.

But there are also unknown unknowns. These are things we do not know we don't know.

Though this sounds like a riddle, the quotation actually encapsulates some real truths that certainly apply to astronomy. In these Gresham lectures I have spent my time telling you about the things that we know and are pretty certain about, the “known knowns”, and I have certainly implied that there are things of which, at present, we have no inkling at all, the “unknown unknowns”. But we also have “known unknowns”: things that we know must exist or happen for our universe to exist, but of which we have no real understanding. In this lecture we will look at some of the as-yet-unsolved mysteries of the Universe and describe the scientific instruments, now coming into use or at the planning stage, that will help to unravel them.

Matter, Anti-matter and Dark Matter (1): what gives matter its mass?

This is one of the key questions in physics today, and vast sums of money are being spent trying to answer it! In the 1960s, Peter Higgs and others postulated that, very shortly after the origin of the Universe, a “field”, called the Higgs field, came into existence that now permeates the whole of the Universe. It is believed that it is this field that gives mass to the particles that pass through it. It is not easy to explain how this works, but an analogy may help. Imagine a crowded post-Oscars party. If you or I could somehow be present, no one would take any notice of us and we could move around pretty easily: as rather insignificant beings (in this context) we have little “mass”. Now the young lady who has just been awarded the “best actress” Oscar arrives. Everyone will congregate around her, congratulate her and want to shake her hand. Her passage through the room will be very slow: she has a lot of “mass”! The throng of people in the room plays the part of the Higgs field, slowing down those trying to pass through it according to (in this case) their perceived importance. In the same way, it is the interaction between the Higgs field and the fundamental particles that gives them their mass.

We all know about electric and magnetic fields, which are produced by charged particles. In quantum theory such a field can be thought of as arising from what are called “virtual particles”, with the strength of the field simply a measure of the density of these particles. What do we mean by a virtual particle? Virtual particles have no long-term existence: they come into being, exist for only a very short time and then disappear again. How long they can exist is set by the Heisenberg Uncertainty Principle (one of the fundamental tenets of quantum theory), which, in one formulation, states that the heavier the virtual particle that springs into existence, the shorter the time for which it can exist. The particle associated with electric and magnetic fields is the photon. So within a space permeated by an electric or magnetic field there will be virtual photons, and the “force” that, for example, attracts unlike charges or repels like ones is due to the exchange of these virtual photons! In the same way, we believe that the strong nuclear force between the quarks that make up neutrons and protons results from the exchange of virtual gluons, and that the force holding neutrons and protons together in nuclei is due to the exchange of virtual mesons such as the pi and rho mesons. So one way of looking at the interaction between particles and the Higgs Field is as the exchange of the virtual particle associated with it which, in the case of the Higgs field, is called the Higgs Boson.
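The energy-time argument above can be turned into rough numbers. The sketch below is an order-of-magnitude estimate only. It assumes that a virtual particle of rest energy mc² can exist for about Δt ≈ ħ/(mc²), with factors of 2 dropped, and that it travels no faster than light, so the force it carries reaches about cΔt = ħc/(mc²). The particle masses are standard values, not figures from the lecture. The pion case reproduces Yukawa's classic estimate of the range of the nuclear force.

```python
import math

HBAR_MEV_S = 6.582119569e-22   # reduced Planck constant, MeV s
HBAR_C_MEV_FM = 197.3269804    # hbar * c, MeV fm (1 fm = 1e-15 m)

def virtual_lifetime_s(rest_energy_mev):
    """Rough lifetime (s) of a virtual particle: dt ~ hbar / (m c^2)."""
    if rest_energy_mev == 0:
        return math.inf  # massless carrier (photon): no time limit
    return HBAR_MEV_S / rest_energy_mev

def force_range_fm(rest_energy_mev):
    """Rough range (fm) of the force it carries: c * dt = hbar c / (m c^2)."""
    if rest_energy_mev == 0:
        return math.inf  # massless carrier: infinite range
    return HBAR_C_MEV_FM / rest_energy_mev

# Standard rest energies in MeV (assumed values, not from the lecture).
for name, mc2 in [("photon", 0.0), ("charged pion", 139.57), ("rho meson", 775.3)]:
    print(f"{name:13s} lifetime ~ {virtual_lifetime_s(mc2):.2e} s, "
          f"range ~ {force_range_fm(mc2):.2f} fm")
```

The heavier rho meson gives a shorter range than the pion, which is the "heavier means shorter-lived" rule in numerical form.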

If we could detect a Higgs Boson, the theory would be confirmed. But remember, these are virtual particles: they do not hang around to be detected! However, there is a trick that can, in principle, be played. A virtual particle has “borrowed” energy to come into existence and must almost instantaneously pay it back. If, however, you could give it sufficient energy during the brief moment of its existence, it could become real. There is a real problem here. Though we do not know exactly how much energy is required, we do know that it is very high and, to date, we have not been able to concentrate sufficient energy into a sufficiently small volume of space to create a real Higgs Boson. Even if one were created, it would decay almost instantaneously into a myriad of other particles (its decay products), but it is hoped that precise analysis of these could prove that a Higgs Boson had had a brief moment of existence!

This is why a key objective of the Large Hadron Collider is to attempt to detect the Higgs Boson – regarded as one of the holy grails of Particle Physics and a necessary component of the Standard Model that attempts to describe the fundamental particles that make up our universe.

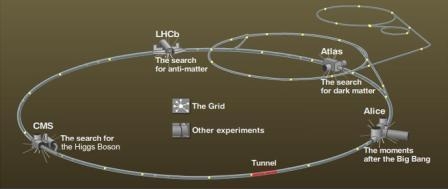

The Large Hadron Collider (LHC) is the world's largest and highest-energy particle accelerator. It lies in a tunnel 27 kilometres in circumference beneath the Franco-Swiss border near Geneva, Switzerland, and is designed to collide opposing beams of either protons, at an energy of 7 teraelectronvolts (TeV) per particle, or lead nuclei, at an energy of 574 TeV per nucleus. The proton collisions are those that will be used in the search for the Higgs Boson, whilst the lead nuclei collisions (which began in November 2010) have a bearing on the matter-antimatter problem that will be discussed later.
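The 574 TeV figure follows directly from the 7 TeV proton figure. This is a minimal sketch that assumes fully stripped lead-208 ions (charge +82) and treats a particle's energy at these speeds as its momentum times c. The bending magnets fix the momentum per unit charge a beam can have, so a nucleus carrying 82 units of charge reaches 82 times the proton energy.

```python
PROTON_ENERGY_TEV = 7.0  # design energy of a (singly charged) proton beam

def ion_energy_tev(charge_z, proton_energy_tev=PROTON_ENERGY_TEV):
    """Energy of a fully stripped ion of charge Z steered by the same magnets.

    The magnets set momentum per unit charge; at LHC energies E ~ p c,
    so energy scales with the charge Z.
    """
    return charge_z * proton_energy_tev

LEAD_Z, LEAD_A = 82, 208               # lead-208: 82 protons, 208 nucleons
e_nucleus = ion_energy_tev(LEAD_Z)
e_per_nucleon = e_nucleus / LEAD_A
print(f"Pb-208: {e_nucleus:.0f} TeV per nucleus, {e_per_nucleon:.2f} TeV per nucleon")
```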

The collider tunnel contains two parallel beam pipes whose proton beams travel in opposite directions around the ring. Over 1,600 superconducting magnets keep the beams focussed on their circular paths, which cross at four points where the two beams interact. In total, 96 tonnes of liquid helium are used to keep the magnets at their operating temperature of 1.9 K (−271.25 °C), making the LHC the largest cryogenic facility in the world at liquid helium temperature! When operating at full power, the protons will have an energy of 7 TeV and circulate through the rings at ~0.999999991 c, about 3 m/s slower than the speed of light, taking less than 90 microseconds to make one revolution. The protons are grouped into bunches, so that collisions take place at discrete intervals of time and the electronics in the detectors placed at the beam intersection points can complete their measurements in between.
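The speed and lap-time figures can be checked with special relativity. This sketch assumes a ring circumference of 26,659 m, the precise value behind the rounded "27 km", and treats 7 TeV as the protons' total energy. The Lorentz factor γ = E/(mc²) then gives the speed. It computes 1 − β as 1/(γ²(1 + β)), because subtracting two nearly equal numbers loses precision.

```python
import math

PROTON_REST_GEV = 0.938272       # proton rest energy, GeV
C_M_PER_S = 299_792_458.0        # speed of light, m/s
RING_CIRCUMFERENCE_M = 26_659.0  # assumed precise LHC circumference, m

def beam_kinematics(energy_gev):
    """Return (beta, speed deficit in m/s, revolution time in s) for a proton."""
    gamma = energy_gev / PROTON_REST_GEV            # Lorentz factor E / (m c^2)
    beta = math.sqrt(1.0 - 1.0 / gamma ** 2)        # v / c
    one_minus_beta = 1.0 / (gamma ** 2 * (1.0 + beta))  # stable form of 1 - beta
    deficit = C_M_PER_S * one_minus_beta            # how far below c, in m/s
    revolution_time = RING_CIRCUMFERENCE_M / (beta * C_M_PER_S)
    return beta, deficit, revolution_time

beta, deficit, t_rev = beam_kinematics(7000.0)
print(f"beta = {beta:.9f}, {deficit:.1f} m/s slower than light, "
      f"one lap in {t_rev * 1e6:.1f} microseconds")
```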

The detectors that will be used to search for the signature of the Higgs Boson are ATLAS and CMS. ATLAS is about 45 metres long, more than 25 metres high, and weighs about 7,000 tonnes. It is about half as big as Notre Dame Cathedral in Paris and weighs the same as the Eiffel Tower! When running, it produces a prodigious amount of data which, if all were recorded, would fill 100,000 CDs per second. Instead, very high-speed computers analyse the data in real time and only record data when it looks as though an interesting event has occurred, at a rate equivalent to 27 CDs per minute. The CMS detector is built around a huge solenoid magnet: a cylindrical coil of superconducting cable that generates a magnetic field of 4 tesla, about 100,000 times that of the Earth. The magnetic field is confined by a steel 'yoke' that forms the bulk of the detector's weight of 12,500 tonnes.

It has been said that the search for the Higgs Boson is not like looking for a needle in a haystack but for one in 10,000 haystacks! It could take several years before enough events have been recorded to prove, or disprove, its existence.
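Why might it take years? Here is a hedged illustration using the simplest counting statistic, Z ≈ S/√B. S is the number of signal events and B the number of background events, and Z = 5 ("five sigma") is the conventional discovery threshold in particle physics. The event rates below are purely hypothetical and are chosen only to show the scaling. Both S and B grow in proportion to running time t, so Z grows only as √t.

```python
import math

def significance(signal, background):
    """Simple counting significance Z ~ S / sqrt(B), valid when S << B."""
    return signal / math.sqrt(background)

def years_to_reach(z_target, signal_per_year, background_per_year):
    """Years until S / sqrt(B) reaches z_target, with constant yearly rates.

    After t years S = s t and B = b t, so Z = (s / sqrt(b)) * sqrt(t),
    which gives t = (z_target * sqrt(b) / s) ** 2.
    """
    return (z_target * math.sqrt(background_per_year) / signal_per_year) ** 2

# Hypothetical rates for illustration only: 20 signal events per year
# hidden among 100 background events per year.
t = years_to_reach(5.0, 20.0, 100.0)
print(f"5 sigma after ~{t:.0f} years; check: Z = {significance(20.0 * t, 100.0 * t):.2f}")
```

Doubling the collision rate only shortens the wait by half, and halving the background helps just as much. This is why detector design and data selection matter as much as raw energy.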

Matter, Anti-matter and Dark Matter (2): Why do we have a Matter Universe?

In 1928 Paul Dirac took an important step towards bringing quantum physics into conformity with Einstein's special theory of relativity by devising an equation (now called the Dirac equation) that could describe the behaviour of electrons. This equation provided a natural explanation of one of the electron's intrinsic properties: its spin. [It is the flip in the spin of the electron relative to that of the proton in a hydrogen atom that gives rise to the 21cm hydrogen line, which will play a key part later in this lecture.]

Considering how his equation could be interpreted, Dirac proposed in 1931 that there should exist an 'anti-electron': a particle with the same mass and spin as the electron but with the opposite electrical charge. By predicting the existence of this antiparticle, now called the positron, Dirac became recognized as the 'discoverer' of antimatter, one of the most important discoveries of the last century. In 1932 Carl Anderson, an American physicist, was studying the tracks left by showers of cosmic ray particles in a Wilson cloud chamber. He saw a track left by "something positively charged, and with the same mass as an electron." Anderson had detected a positron, and so proved that Dirac's prediction of antimatter was correct.

Dirac asserted that every particle has an “antiparticle” with the same mass and spin but the opposite electric charge. And just as protons, neutrons and electrons combine to form atoms and matter, antiprotons, antineutrons and positrons can combine to form antiatoms and antimatter. Puzzled as to why our universe seems to be composed of matter only, Dirac speculated that there might even be mirror galaxies or universes made entirely of antimatter, but no experiment has yet been able to detect the antigalaxies or vast stretches of antimatter in space that he imagined.

Should matter come into contact with antimatter (a charged particle and its antiparticle attract each other, as they have opposite charges), the two instantly annihilate each other, giving rise to a burst of radiation. This is the most efficient conversion of mass into energy possible, which is no doubt why it was used to power the Starship Enterprise and so allow it to travel at “warp” speeds.
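The energy released follows from E = mc², applied to both the matter and the antimatter, since both are converted. This small sketch uses standard constants. The one-gram example and the TNT comparison (1 kiloton = 4.184 × 10¹² J) are illustrative choices, not figures from the lecture.

```python
C_M_PER_S = 299_792_458.0     # speed of light, m/s
ELECTRON_REST_KEV = 510.99895 # electron (and positron) rest energy, keV
TNT_KILOTON_J = 4.184e12      # energy of one kiloton of TNT, J

def annihilation_energy_j(matter_kg):
    """Energy when matter_kg of matter meets an equal mass of antimatter: E = 2 m c^2."""
    return 2.0 * matter_kg * C_M_PER_S ** 2

# An electron and a positron at rest annihilate into two gamma-ray photons.
print(f"electron + positron -> two gamma rays of {ELECTRON_REST_KEV:.0f} keV each")

energy = annihilation_energy_j(1e-3)  # one gram of each
print(f"1 g of matter + 1 g of antimatter: {energy:.2e} J, "
      f"about {energy / TNT_KILOTON_J:.0f} kilotons of TNT")
```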

The question that really confounds physicists today is why our universe appears to be made of matter only. The standard theories of physics say that when the universe came into existence in the Big Bang, nearly 14 billion years ago, the energy released must have formed equal amounts of matter and antimatter: the laws of nature require that matter and antimatter be created in pairs. But within less than a second of the Big Bang, matter particles somehow outnumbered antimatter particles by a tiny fraction, so that for, say, every billion antiparticles there were a billion and one particles. All the antiparticles were annihilated, giving rise to the radiation that now makes up the Cosmic Microwave Background (then in the form of gamma rays). So, within a second or so of the creation of the universe, all the antimatter had been destroyed, leaving behind only matter. So far, physicists have not been able to identify the exact mechanism that would produce this “asymmetry” between matter and antimatter and so explain the tiny excess of matter.

Today, antimatter is created naturally, mainly by cosmic rays: high-energy particles from space that form new particles as they penetrate the Earth's atmosphere. It can also be produced in accelerators like those at CERN, where scientists create high-energy collisions to produce particles and their antiparticles. In one of the most recent CERN experiments, published in November 2010, a team succeeded in trapping atoms of anti-hydrogen, each made up of an antiproton and a positron. Antiprotons at very low temperatures, and thus almost stationary, were first compressed into a matchstick-sized cloud 20 millimetres long and 1.4 millimetres in diameter. A similar cold cloud of positrons was then added, giving rise to around 38 anti-hydrogen atoms that were successfully held inside a magnetic bottle for about one sixth of a second. For the first time, this allows scientists to study antimatter atoms in detail and so may help to determine why matter appears to be in the ascendant.

Physicists believe that there must be a subtle difference in the way matter and antimatter interact with the forces of nature to account for a universe that prefers matter, but they have not yet been able to confirm this experimentally. In 1967 the Russian theoretical physicist Andrei Sakharov set out three conditions that must be met for matter to come to dominate. One of these is “charge-parity” (CP) violation, a kind of asymmetry between particles and their antiparticles in the way that they decay. The goal of the CERN scientists is to find evidence of that asymmetry.

The LHC Beauty (LHCb) detector is designed to answer a specific question: where did all the antimatter go? To do this, LHCb is investigating the slight differences between matter and antimatter by studying a type of particle called the “beauty quark”. Although absent from the Universe today, these were common in the aftermath of the Big Bang, and they will be generated in their billions by the LHC, along with their antimatter counterparts, anti-beauty quarks. 'b' and 'anti-b' quarks are unstable and short-lived, decaying rapidly into a range of other particles. Physicists believe that by comparing these decays they may be able to gain useful clues as to why nature prefers matter over antimatter.

The LHC produces many different types of quark when the particle beams collide. To catch the beauty quarks, LHCb has developed sophisticated movable tracking detectors that sit close to the path of the beams circulating in the LHC. In September 2010, LHCb observed what have been called “beautiful atoms”: bound states of a beauty quark and an anti-beauty quark. [Quarks are only found bound together in triplets, as in protons (up, up, down) and neutrons (up, down, down), or as quark-antiquark pairs.] The beautiful atom is about 10 times heavier than the proton but slightly smaller in size.

A further approach to understanding what happened during the Big Bang is to try to recreate the conditions that then existed. Since November 2010 the LHC has been used to collide lead ions, each about 200 times heavier than a proton (208 protons and neutrons). The first results have shown that the protons and neutrons that make up the nuclei can “melt” into their constituent quarks and gluons, forming what is thought to be the primordial “soup” that existed during the first few millionths of a second after the Big Bang.

Matter, Anti-matter and Dark Matter (3): What is Dark Matter?

One of the biggest mysteries in modern astronomy is that over 90% of the Universe is invisible. This mysterious missing stuff is known as 'dark matter'. The problem arose when astronomers tried to weigh galaxies. There are two ways of doing this. Firstly, we can estimate how much a galaxy weighs by measuring how bright it is and converting this into mass using the known relationship between the masses and luminosities of stars. The second way is to look at how stars move in their orbits around the centre of the galaxy. In just the same way that we can calculate the mass of the Sun from our distance from it and the speed at which we orbit it, we can calculate the mass of a galaxy by studying how fast stars at its very edge move around it: the faster they move, the more mass there is inside their orbits. But when astronomers such as Jan Oort and Fritz Zwicky made these calculations in the early 1930s, the two answers didn't match. As they were very confident that both methods were sound, they came to a startling conclusion: there must be a form of matter out there that we cannot see, which became known as 'dark matter'.
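The second method rests on a simple balance. For a circular orbit, gravity provides the centripetal force, so GMm/r² = mv²/r and M = v²r/G, where M is the mass inside the orbit. The sketch below assumes circular orbits and a spherically symmetric mass distribution. The galaxy example is hypothetical: it assumes stars orbit at the same 220 km/s at every radius. In that case the enclosed mass keeps rising in step with radius, even where little light is seen.

```python
G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
KPC_M = 3.0857e19        # one kiloparsec in metres
SOLAR_MASS_KG = 1.989e30

def enclosed_mass_kg(orbital_speed_m_s, radius_m):
    """Mass inside a circular orbit, from G M m / r^2 = m v^2 / r."""
    return orbital_speed_m_s ** 2 * radius_m / G

# The Sun, from the Earth's orbit (r = 1 AU, v ~ 29.8 km/s).
sun = enclosed_mass_kg(29_780.0, 1.496e11)
print(f"Sun: {sun:.3e} kg")

# Hypothetical galaxy whose stars all orbit at 220 km/s: mass grows in proportion to r.
for r_kpc in (10, 20, 40):
    m_solar = enclosed_mass_kg(220_000.0, r_kpc * KPC_M) / SOLAR_MASS_KG
    print(f"v = 220 km/s at {r_kpc} kpc -> {m_solar:.1e} solar masses")
```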

So what is dark matter made of? No one knows for sure. Normal matter, making up the stars, planets and ourselves, is made of atoms, which are composed of protons, neutrons and electrons. Scientists call this "baryonic" matter. A small part of the dark matter is of the normal, baryonic variety, including brown dwarf stars, dust clouds and other objects such as black holes that are simply too small, or too dim, to be seen from great distances.

The amount of ordinary, or baryonic, matter in the Universe, whether visible or dark, can be estimated from the relative quantities of deuterium and helium that were formed around three minutes after the Big Bang. If there had been a lot of baryonic matter at that time, collisions, first between nucleons and later between nuclei, would have been very frequent, and most of the deuterium would have been converted into helium, so the proportion of deuterium remaining should now be very small. If instead there was little baryonic matter, the proportion of deuterium should be relatively greater. From the most recent measurements of the current abundances of deuterium and helium, it can be deduced that the baryonic matter in the Universe amounts to only about one seventh of that needed to keep stars in their galaxies and galaxies in their clusters.

We know that one component of dark matter is in the form of neutrinos, which have no electric charge and rarely interact with ordinary matter. The estimated mass of a neutrino is very small, but if we multiply it by the huge number of neutrinos present in the Universe, we obtain a contribution to the total mass of the Universe which is slightly less than that from visible matter. As neutrinos move at close to the speed of light, they form what is called “hot dark matter”. If there were too much hot dark matter, it would have been very difficult for galaxies to form and we would not be here, so this is a comforting, if not surprising, result!

Thus we believe that the major contribution to dark matter is in the form of slowly moving (by comparison) massive particles, called “cold dark matter”. Theoretical physicists have come up with various hypotheses as to what these mysterious particles could be. Many of these come out of the physics theory called “supersymmetry”. A good candidate is the neutralino, the lightest of the electrically neutral particles predicted by supersymmetry.

Another possibility is nuclearites: combinations of up, down and strange quarks. These would be denser than ordinary nuclei and would be stable even at masses much greater than that of a uranium nucleus. A key goal of the LHC is to attempt to produce candidate dark matter particles. So far, high-energy accelerators have not observed any, which implies that they are very massive. As the LHC will be able to reach energies far higher than any existing accelerator (and hence create more massive particles), there is hope that dark matter particles might be detected. Such a detection might be quite an early result from the ATLAS and CMS detectors and, even if no detections were made, the LHC results would at least eliminate some of the possibilities.

If we know very little about dark matter, we know even less about dark energy! It gives rise, we believe, to a repulsive force that arises out of the vacuum of space. In fact, Einstein introduced such a term into the equations of his General Theory of Relativity when he tried to explain a static universe, the repulsive force just balancing the attractive force of gravity. He called it the Cosmological Constant, Λ. When Hubble found that the Universe was expanding, Einstein is said to have called this term the biggest blunder of his life: had he not brought in the repulsive force, he could have predicted that the Universe was expanding. However, observations of very distant Type Ia supernovae made in the late 1990s indicated that, in complete contrast to the then-current ideas of cosmology, which predicted that the rate of expansion would be slowing down, the Universe is in fact expanding at an ever-increasing rate. The switch from a slowing to an accelerating expansion happened some 4-5 billion years ago. One explanation is that the cause is the cosmological constant: a constant energy density uniformly filling space. In the current standard model of cosmology, the Lambda-CDM model, dark energy accounts for 74% of the total mass-energy of the universe. [CDM is Cold Dark Matter.]
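Whether the expansion is slowing or speeding up can be read off the deceleration parameter q, which is positive for slowing and negative for speeding up. This is a minimal sketch that assumes a flat universe containing only matter (26%) and a cosmological constant (74%, the figure quoted above), with radiation neglected. In that model q = (Ω_m(1+z)³/2 − Ω_Λ)/(Ω_m(1+z)³ + Ω_Λ), and the changeover happens where Ω_m(1+z)³ = 2Ω_Λ.

```python
OMEGA_LAMBDA = 0.74            # dark energy fraction quoted in the text
OMEGA_M = 1.0 - OMEGA_LAMBDA   # assumed flat universe; radiation neglected

def deceleration_parameter(z):
    """q at redshift z for flat Lambda-CDM: > 0 slowing down, < 0 speeding up."""
    matter = OMEGA_M * (1.0 + z) ** 3   # matter was denser by (1 + z)^3 in the past
    return (0.5 * matter - OMEGA_LAMBDA) / (matter + OMEGA_LAMBDA)

# q changes sign where Omega_m (1 + z)^3 = 2 Omega_Lambda.
z_switch = (2.0 * OMEGA_LAMBDA / OMEGA_M) ** (1.0 / 3.0) - 1.0

print(f"q today:    {deceleration_parameter(0.0):+.2f}")
print(f"q at z = 2: {deceleration_parameter(2.0):+.2f}")
print(f"deceleration turns into acceleration at z ~ {z_switch:.2f}")
```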

There are, however, other possible scenarios apart from the Λ term, including scalar fields such as quintessence (named after the “fifth element” of ancient philosophy), whose energy density can vary in time and space. These can be difficult to distinguish from a cosmological constant because the changes may be extremely slow, so very high-precision measurements of the expansion of the universe are required to understand how the expansion rate changes over time. In general relativity, the evolution of the expansion rate is determined by the equation of state of the contents of the universe: the relationship between their pressure and energy density, usually written as w = p/(ρc²). A cosmological constant has w = −1 exactly. Measuring the equation of state of dark energy is one of the biggest efforts in observational cosmology today.
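The equation of state matters because it fixes how the energy density changes as the universe expands. For constant w, energy conservation gives ρ ∝ a^(−3(1+w)), where a is the scale factor (a = 1 today, a = 1/(1+z) in the past). This sketch relies on that constant-w assumption. The w = −0.9 case is a hypothetical stand-in for a quintessence-like field, and real quintessence models may have a w that changes with time. The output shows why a cosmological constant is special: its density never changes.

```python
def density_ratio(scale_factor, w):
    """rho(a) / rho(today) for a fluid with constant w = p / (rho c^2).

    Energy conservation in an expanding universe gives rho ~ a^(-3 (1 + w)).
    """
    return scale_factor ** (-3.0 * (1.0 + w))

cases = [(0.0, "matter (w = 0)"),
         (-1.0, "cosmological constant (w = -1)"),
         (-0.9, "hypothetical quintessence (w = -0.9)")]
for w, label in cases:
    past = density_ratio(0.5, w)  # when the universe was half its present size (z = 1)
    print(f"{label:38s} density at z = 1 relative to today: {past:.2f}")
```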

There are two new planned optical instruments that will help to distinguish between the various possibilities by providing the high precision observations that will be required.

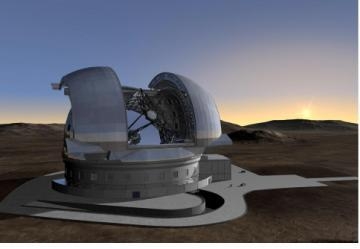

The European Extremely Large Telescope (E-ELT) will be a ground-based astronomical observatory with a 42-metre diameter mirror made up of almost 1,000 hexagonal segments. It will be sited on Cerro Armazones in Chile, 20 km from Cerro Paranal, where ESO operates its Very Large Telescope facility. Like Paranal, Armazones will enjoy near-perfect observing conditions, with at least 320 cloudless nights a year. The Atacama's famous aridity means that the amount of water vapour in the atmosphere is very limited, further reducing the perturbation and attenuation that starlight experiences as it passes through the Earth's atmosphere.

The telescope will gather 15 times more light than the largest optical telescopes operating today. It will incorporate an innovative five-mirror design that uses adaptive optics to correct for the turbulence of the atmosphere, giving exceptional image quality. This will enable it to provide images 15 times sharper than those from the Hubble Space Telescope.
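The two "15 times" figures come from simple scalings. Light grasp goes as the square of the mirror diameter D, and the finest resolvable angle (the diffraction limit) goes as λ/D. The sketch assumes ideal circular apertures with no central obstruction or segment gaps, a 10 m aperture for today's largest telescopes, and an observing wavelength of 1 micron. It gives about 17.6 and 17.5, the same order as the quoted factors of 15. Real performance depends on the telescope's actual geometry and on how well adaptive optics corrects the atmosphere.

```python
import math

ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0
WAVELENGTH_M = 1.0e-6  # assumed near-infrared observing wavelength

def light_grasp_ratio(d1_m, d2_m):
    """Ratio of collecting areas of two unobstructed circular mirrors (goes as D^2)."""
    return (d1_m / d2_m) ** 2

def diffraction_limit_arcsec(d_m, wavelength_m):
    """Rayleigh criterion: smallest resolvable angle ~ 1.22 * wavelength / D."""
    return 1.22 * wavelength_m / d_m * ARCSEC_PER_RAD

grasp = light_grasp_ratio(42.0, 10.0)  # E-ELT vs an assumed 10 m telescope
hubble = diffraction_limit_arcsec(2.4, WAVELENGTH_M)
eelt = diffraction_limit_arcsec(42.0, WAVELENGTH_M)
sharpness = hubble / eelt

print(f"light grasp, 42 m vs 10 m: {grasp:.1f}x")
print(f"diffraction limit: Hubble {hubble:.3f} arcsec, E-ELT {eelt:.4f} arcsec")
print(f"ideal sharpness gain over Hubble: {sharpness:.1f}x")
```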

Thanks to its vast aperture, the E-ELT will help us to elucidate the nature of dark energy by discovering and identifying distant Type Ia supernovae. These are excellent distance indicators and can be used to map out space and its expansion history. In addition to this geometric method, the E-ELT will also attempt, for the first time, to constrain dark energy by directly observing the global dynamics of the Universe: the evolution of the expansion rate causes a tiny drift over time in the redshifts of distant objects. The E-ELT should be able to detect this effect in the intergalactic medium, a measurement that will offer a truly independent and unique approach to exploring the expansion history of the Universe.
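The size of this redshift drift can be estimated with the Sandage-Loeb relation, dz/dt = (1+z)H₀ − H(z), where H is the expansion rate. The sketch assumes a flat Lambda-CDM universe with H₀ = 70 km/s/Mpc (an assumed value) and the 74% dark energy fraction quoted earlier. It expresses the drift as an equivalent velocity shift, c·Δz/(1+z). The result is a few centimetres per second per decade, which shows just how tiny the effect is.

```python
import math

H0_KM_S_PER_MPC = 70.0          # assumed Hubble constant
OMEGA_LAMBDA = 0.74             # dark energy fraction quoted in the text
OMEGA_M = 1.0 - OMEGA_LAMBDA    # flat universe assumed; radiation neglected
KM_PER_MPC = 3.0857e19
C_CM_PER_S = 2.99792458e10
SECONDS_PER_YEAR = 3.156e7

def hubble_rate_per_s(z):
    """Expansion rate H(z) in s^-1 for flat Lambda-CDM."""
    h0 = H0_KM_S_PER_MPC / KM_PER_MPC   # convert km/s/Mpc to 1/s
    return h0 * math.sqrt(OMEGA_M * (1.0 + z) ** 3 + OMEGA_LAMBDA)

def velocity_drift_cm_s(z, years):
    """Apparent velocity change of an object at redshift z over `years` of observing.

    Sandage-Loeb: dz/dt = (1 + z) H0 - H(z); as a velocity, dv = c dz / (1 + z).
    """
    dz_dt = (1.0 + z) * hubble_rate_per_s(0.0) - hubble_rate_per_s(z)
    return C_CM_PER_S * dz_dt * years * SECONDS_PER_YEAR / (1.0 + z)

for z in (1.0, 2.0, 3.0, 4.0):
    print(f"z = {z:.0f}: {velocity_drift_cm_s(z, 10.0):+.1f} cm/s per decade")
```

The change of sign is itself informative. Objects at low redshift drift one way because the expansion is now accelerating, while very distant ones drift the other way because they sample the earlier, decelerating era.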

Final go-ahead for the E-ELT is expected in 2011, and it is hoped that the telescope can be operational by the end of the decade, at a cost in the region of a billion euros.

The E-ELT will also search for possible variations in the values of fundamental physical constants, such as the fine-structure constant and the proton-to-electron mass ratio, over the age of the Universe. An unambiguous detection of such variations would have far-reaching consequences for unified theories of the fundamental interactions and for the existence of extra dimensions of space and/or time. Physics would need to change!

There is another technique for investigating both dark matter and dark energy. Called gravitational lensing, it results from a foreground mass, such as a galaxy or cluster of galaxies, distorting the surrounding space. This makes light from more distant galaxies lying beyond it travel along curved paths, distorting their images. There are two types of gravitational lensing: strong and weak. Strong lensing, which is comparatively rare, results when the background and foreground objects are very closely aligned, and multiple images may be produced. In this case it is possible to map accurately the distribution of matter in the lensing galaxy or cluster and so determine how the dark matter within it is distributed.
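The angular scale of strong lensing is set by the Einstein radius, θ_E = √(4GM/c² · D_ls/(D_l D_s)). Here M is a point mass at distance D_l lensing a source at distance D_s, and D_ls is the lens-source distance. The configuration below is hypothetical. Setting D_ls = D_s − D_l is a flat-space simplification, and real analyses use cosmological angular-diameter distances and extended mass models. The result shows why image separations of a few arcseconds point to galaxy-scale masses.

```python
import math

G = 6.674e-11             # m^3 kg^-1 s^-2
C = 2.998e8               # speed of light, m/s
SOLAR_MASS_KG = 1.989e30
GPC_M = 3.0857e25         # one gigaparsec in metres
ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0

def einstein_radius_arcsec(mass_solar, d_lens_m, d_source_m, d_lens_source_m):
    """Ring radius for a point-mass lens and a perfectly aligned source."""
    m = mass_solar * SOLAR_MASS_KG
    theta_rad = math.sqrt(4.0 * G * m / C ** 2 * d_lens_source_m / (d_lens_m * d_source_m))
    return theta_rad * ARCSEC_PER_RAD

# Hypothetical case: a 10^12 solar-mass galaxy halfway to a source 2 Gpc away.
theta = einstein_radius_arcsec(1e12, 1.0 * GPC_M, 2.0 * GPC_M, 1.0 * GPC_M)
print(f"Einstein radius ~ {theta:.1f} arcsec")
```

Because θ_E grows only as √M, a cluster a hundred times more massive spreads its images just ten times further apart.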

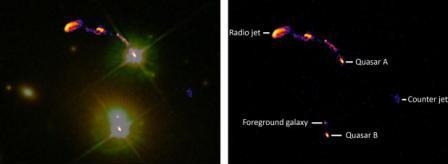

The first strong gravitational lens was found in a radio survey made with the Lovell Telescope at Jodrell Bank Observatory and was identified as a lens in 1979. Called the “Double Quasar”, it results from a single distant quasar being lensed by a foreground galaxy, giving rise to two images. The way in which the images of the distant quasar and its jets are distorted tells us about the distribution of matter and dark matter in and around the lensing galaxy. The author was a designer of the MERLIN array and so was very pleased when, on 9th December 2010, the first image made with a major upgrade to the array was released. Called e-MERLIN, it links the 7 radio telescopes that make up the array by fibre optic cables. This has increased the data rate from each telescope from 128 Mbit/s to 30 Gbit/s which, coupled with new receivers, gives a significant increase in sensitivity, allowing astronomers to probe deeper into the universe. The first e-MERLIN image was of the Double Quasar and shows the jets emanating from the quasar in great detail, which will allow a detailed study of the matter distribution that is distorting the image.

The Double Quasar: combined Hubble Space Telescope and e-MERLIN image left, annotated e-MERLIN image right.
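A rough sense of what the faster e-MERLIN links buy comes from the radiometer equation, in which the noise in a radio measurement falls as 1/√(bandwidth × integration time). This sketch assumes that the data rate is proportional to the observed bandwidth (the same number of bits per sample). It ignores the new receivers, so it captures only the bandwidth part of the improvement.

```python
import math

def sensitivity_gain(old_bandwidth_hz, new_bandwidth_hz):
    """Improvement in the faintest detectable signal for the same telescopes,
    system temperature and integration time.

    Radiometer equation: noise ~ T_sys / sqrt(bandwidth * time), so the
    gain is sqrt(new_bandwidth / old_bandwidth).
    """
    return math.sqrt(new_bandwidth_hz / old_bandwidth_hz)

# Assumption: bandwidth scales with data rate, so the data-rate ratio stands in for it.
OLD_RATE, NEW_RATE = 128e6, 30e9   # bits per second per telescope, from the text
print(f"~{sensitivity_gain(OLD_RATE, NEW_RATE):.0f}x more sensitive from bandwidth alone")
```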

The very close alignments that give rise to strong gravitational lensing are very rare, so detailed observations of large areas of sky are needed to find them. This, along with studies of weak gravitational lensing, is a major goal of the Large Synoptic Survey Telescope (LSST), described below.

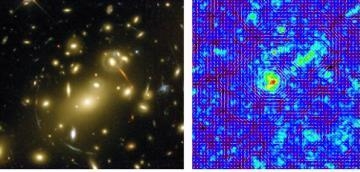

Weak gravitational lensing can best be described with the aid of the famous HST image of the cluster Abell 2218 shown here. The mass of the foreground cluster is distorting the images of more distant galaxies beyond it. In most cases, however, the “lens” is not strong enough to form multiple images or giant arcs like those seen in this image. The background galaxies are nevertheless still distorted: they are stretched and magnified, but by amounts so small that they are hard to measure. For obvious reasons, this is called "weak gravitational lensing".

Abell Cluster 2218 and map of the lensing distortions

In principle, weak lensing has the potential to be a powerful probe of cosmological parameters, especially when combined with other observations such as the cosmic microwave background, supernovae and galaxy surveys. Detecting such extremely small effects requires averaging over many background galaxies, so surveys must be both deep and wide. Because these background galaxies are small, the image quality must also be very good.
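The need to average over many galaxies can be shown with a toy simulation. It assumes that, to first order, each galaxy's observed ellipticity is its random intrinsic ellipticity plus the lensing shear. The intrinsic ellipticities are drawn from a Gaussian of width 0.3 (an assumed, typical value), and the true shear is 1%. Because intrinsic shapes average to zero, the mean observed ellipticity estimates the shear, and its uncertainty falls only as 1/√N.

```python
import random
import statistics

def estimate_shear(true_shear, n_galaxies, intrinsic_scatter=0.3, seed=1):
    """Return (estimated shear, expected 1-sigma uncertainty).

    Toy model: observed ellipticity = random intrinsic ellipticity + shear,
    so the mean of the observed values estimates the shear.
    """
    rng = random.Random(seed)
    observed = [rng.gauss(0.0, intrinsic_scatter) + true_shear for _ in range(n_galaxies)]
    return statistics.fmean(observed), intrinsic_scatter / n_galaxies ** 0.5

for n in (100, 10_000, 1_000_000):
    est, err = estimate_shear(0.01, n)
    print(f"{n:>9,d} galaxies: shear = {est:+.4f} +/- {err:.4f} (true 0.0100)")
```

With a hundred galaxies the 1% signal is lost in the noise. A million galaxies pin it down to a few parts in ten thousand, which is why surveys must be so wide and deep.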

An interesting technique is to use the redshifts of the background galaxies to divide the survey into multiple redshift bins. The low-redshift bins will only be lensed by structures very near to us, while the high-redshift bins will be lensed by structures over a wide range of redshift. This technique, dubbed "cosmic tomography", makes it possible to map out the 3D distribution of mass. Because the third dimension involves not only distance but cosmic time, tomographic weak lensing is sensitive not only to the matter power spectrum today, but also to its evolution over the history of the universe, and the expansion history of the universe during that time. This is a very valuable cosmological probe, and many proposed experiments to measure the properties of dark energy and dark matter have focused on weak lensing.
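You can see why sources in different redshift bins respond to different structures from the geometric part of the lensing efficiency. In a flat universe, mass at comoving distance χ_l lenses sources at χ_s with a weight proportional to χ_l(χ_s − χ_l)/χ_s. It does not lens them at all if it lies behind them. The sketch omits the matter-density and (1+z) prefactors of the full lensing kernel, and the two bin distances are hypothetical.

```python
def lensing_weight(chi_lens, chi_source):
    """Geometric lensing efficiency of mass at comoving distance chi_lens for
    sources at chi_source (flat universe, any consistent distance unit).

    Mass at or beyond the sources cannot lens them; in front of them the
    weight chi_l * (chi_s - chi_l) / chi_s peaks halfway to the source.
    """
    if chi_lens <= 0.0 or chi_lens >= chi_source:
        return 0.0
    return chi_lens * (chi_source - chi_lens) / chi_source

# Hypothetical tomographic bins: sources at 1000 Mpc ("near") and 3000 Mpc ("far").
for chi_l in (250, 500, 1000, 1500, 2500):
    near = lensing_weight(chi_l, 1000.0)
    far = lensing_weight(chi_l, 3000.0)
    print(f"lens at {chi_l:>4d} Mpc: near-bin weight {near:6.1f}, far-bin weight {far:6.1f}")
```

Comparing the bins separates nearby structure (seen by both) from distant structure (seen only by the far bin). That separation is what lets tomography recover the third dimension.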

The key requirement is to make observations over a very wide field with high image quality and the LSST is designed to do just that:-

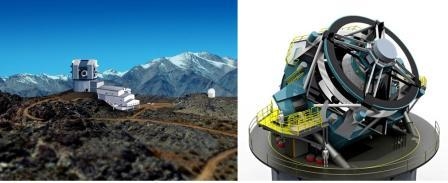

The LSST is a planned wide-field “survey” reflecting telescope that will photograph all the available sky from its Chilean site every three nights. It is currently in its design and development phase, and full science operations for the ten-year survey are expected to begin towards the end of the decade. The telescope will be located on the El Peñón peak of Cerro Pachón, a 2,682-metre-high mountain in the Coquimbo Region of northern Chile, alongside the existing Gemini South Telescope.

The LSST design is unique among telescopes with 8m-class primary mirrors in having a very wide field of view: 3.5 degrees in diameter, covering an area of 9.6 square degrees. (This is comparable to the field of a typical 200mm amateur telescope, which for an 8m telescope is amazing!) Achieving this very wide, undistorted field of view requires three mirrors, rather than the two used by most existing large telescopes. The primary mirror will be 8.4 metres in diameter, the secondary 3.4 metres and the tertiary 5.0 metres, with the tertiary located in a large hole in the primary. The primary and tertiary mirrors were made as a single monolithic unit, completed at the beginning of September 2008.

To make full use of the image quality across this large field requires a CCD camera with 3.2 gigapixels! (A good digital camera has ~10-20 megapixels.) The camera will take a 15-second exposure every 20 seconds. Allowing for maintenance, bad weather, etc., it is expected to take over 200,000 pictures per year, far more than can be reviewed by humans. Storing, managing and effectively mining the enormous data output of the telescope is therefore expected to be the most technically difficult part of the project.
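A quick calculation from the figures above shows that the LSST numbers hang together. The sketch assumes the 3.2 gigapixels tile the whole 3.5-degree circle with no gaps between CCDs. It also assumes, hypothetically, 2 bytes per raw pixel with no compression. It counts each raw image once and ignores calibration frames and processed data products, so the data volume is only a rough lower bound.

```python
import math

FIELD_DIAMETER_DEG = 3.5
PIXELS = 3.2e9
IMAGES_PER_YEAR = 200_000
BYTES_PER_PIXEL = 2   # hypothetical: 16-bit raw values, no compression

# Area of the circular field of view, in square degrees.
field_area_deg2 = math.pi * (FIELD_DIAMETER_DEG / 2.0) ** 2
# Share that area among the pixels (no gaps assumed) to get each pixel's size on the sky.
pixel_area_arcsec2 = field_area_deg2 * 3600.0 ** 2 / PIXELS
pixel_scale_arcsec = math.sqrt(pixel_area_arcsec2)
# Raw image data only: one copy of every pixel of every exposure.
raw_bytes_per_year = PIXELS * BYTES_PER_PIXEL * IMAGES_PER_YEAR

print(f"field of view: {field_area_deg2:.1f} square degrees")
print(f"pixel scale: ~{pixel_scale_arcsec:.2f} arcsec per pixel")
print(f"raw images: ~{raw_bytes_per_year / 1e15:.1f} petabytes per year")
```

A pixel of about 0.2 arcseconds is finer than typical ground-based seeing, which is what "making full use of the image quality" requires.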

In January 2008, software billionaires Charles Simonyi and Bill Gates pledged $20 million and $10 million respectively to the project. It has still to receive the nearly $400 million National Science Foundation grant required to bring it to completion but, in August 2010, a prestigious committee convened by the National Research Council for the National Academy of Sciences ranked the Large Synoptic Survey Telescope (LSST) as its top priority for the next large ground-based astronomical facility.

We now have good evidence that the Big Bang origin of the Universe happened 13.6 thousand million years ago. Hubble Space Telescope observations of the Ultra Deep Field in the constellation Fornax revealed a very faint “smudge” thought to be a very early galaxy. Follow-up observations using one of the 8m telescopes of the VLT in Chile have enabled its redshift to be measured. Published in October 2010, the value was 8.55, the largest redshift ever observed, which indicates that the light we now observe from the galaxy was emitted 13,000 million years ago. The stars that make up the galaxy must therefore have formed within just 600 million years of the Big Bang, when the universe was just 4% of its current age.
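Redshift stretches every wavelength by the factor (1 + z). As an illustration, assume the redshift was measured from hydrogen's Lyman-alpha line, which lies at 121.567 nm in the laboratory. Light emitted in the far ultraviolet then arrives in the near infrared. The factor 1/(1 + z) also gives the size of the universe at that time relative to now.

```python
LYMAN_ALPHA_NM = 121.567   # laboratory wavelength of hydrogen Lyman-alpha, nm

def observed_wavelength_nm(rest_nm, z):
    """Cosmological redshift stretches wavelengths by the factor (1 + z)."""
    return rest_nm * (1.0 + z)

z = 8.55   # redshift quoted in the text
print(f"Lyman-alpha emitted at {LYMAN_ALPHA_NM} nm arrives at "
      f"{observed_wavelength_nm(LYMAN_ALPHA_NM, z):.0f} nm (near infrared)")
print(f"the universe was then {1 / (1 + z):.1%} of its present size")
```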

One of the key questions in astronomy is what happened during this 600-million-year period, which astronomers call the Cosmological Dark Ages, the name coming from the fact that there was then no visible light in the Universe. Several of the new instruments have key programmes to study how the matter created in the Big Bang came together to enable the first stars and galaxies to form. In this lecture we will consider two of these:-

During the first few hundred thousand years after the Big Bang, the protons (hydrogen nuclei), alpha particles (helium nuclei) and electrons that had been created were unable to form stable atoms, as the photons were then energetic enough to ionise atoms as soon as they formed. As the universe expanded, the photon energy dropped until, after ~380,000 years, stable hydrogen and helium atoms could form. Soon afterwards, the electromagnetic radiation pervading the universe was redshifted out of the visible part of the spectrum and the universe became dark.
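Two standard relations let us follow the fading of the light. The background radiation's temperature scales as T = T₀(1 + z), with T₀ = 2.725 K today, and Wien's law gives the wavelength at which a blackbody spectrum peaks. The sketch assumes atoms formed at z ≈ 1100, the redshift usually associated with the ~380,000-year epoch. Even then, the peak lay just beyond the red end of the visible spectrum, and it moved steadily further into the infrared as the universe expanded.

```python
CMB_TEMPERATURE_TODAY_K = 2.725   # present temperature of the background radiation
WIEN_CONSTANT_M_K = 2.898e-3      # Wien's displacement constant, m K

def radiation_temperature_k(z):
    """Blackbody radiation cools as the universe expands: T(z) = T0 (1 + z)."""
    return CMB_TEMPERATURE_TODAY_K * (1.0 + z)

def peak_wavelength_nm(temperature_k):
    """Wien's law: wavelength at which a blackbody spectrum peaks, in nm."""
    return WIEN_CONSTANT_M_K / temperature_k * 1e9

for z in (1100, 500, 200, 50):
    t = radiation_temperature_k(z)
    print(f"z = {z:>4d}: T = {t:6.0f} K, spectrum peaks at {peak_wavelength_nm(t):7.0f} nm")
```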

The resulting hydrogen atoms give us, in principle, a way of observing the evolution of the universe during the dark ages, as hydrogen emits a spectral line in the radio part of the spectrum. It has a frequency of ~1,420 MHz, corresponding to a wavelength of 21cm, and so is called the 21cm line. When this radiation was emitted during the dark ages, the universe was far smaller than it is now and, as the universe has expanded since then, the wavelength of the hydrogen photons will have lengthened in exactly the same ratio. [Hence the frequency that we observe will be far less than 1,420 MHz.] This is called the cosmological redshift. An important point is that the further back through the dark ages one observes, the greater the redshift and the lower the observed frequency. So, by making observations over a range of appropriate frequencies, one is able to observe the universe at different times and so show how the hydrogen gas was able to clump together and finally form the stars and galaxies.
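The link between look-back time and observing frequency described above is a single division: emission at rest frequency f0 from redshift z arrives at f0/(1 + z). The sketch below uses the laboratory value of the 21cm line. The redshifts in the loop were chosen for illustration and are not the SKA's actual planned frequency coverage. Inverting the relation shows which epoch a receiver tuned to a given frequency is looking at.

```python
# Rest frequency of the hydrogen 21cm (hyperfine) line, in MHz.
HI_REST_FREQ_MHZ = 1420.405751


def observed_frequency_mhz(z):
    """Frequency at which 21cm emission from redshift z is received today."""
    return HI_REST_FREQ_MHZ / (1.0 + z)


def redshift_for_frequency(freq_mhz):
    """Inverse relation: the redshift probed by a receiver tuned to freq_mhz."""
    return HI_REST_FREQ_MHZ / freq_mhz - 1.0


# Illustrative redshifts only; larger z means earlier times and lower frequencies.
for z in (0, 6, 8.55, 10, 20, 30):
    print(f"z = {z:5}: observe at {observed_frequency_mhz(z):7.1f} MHz")
```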

Radio astronomers have been observing hydrogen emission since the early 1950s, and it is an incredibly powerful tool for probing the universe, but the signal from hydrogen during the dark ages is very weak. Early in the 1990s, Peter Wilkinson of Jodrell Bank Observatory calculated how large a collecting antenna would have to be in order to detect this emission. It turned out that an antenna with a collecting area of about 1 square kilometre would be needed! A collecting area that size would have to be made up of many smaller antennas linked together in a giant array, and only now is it possible to envisage the construction of such a giant radio telescope, now called the Square Kilometre Array.
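To get a feel for the scale of a square kilometre, the sketch below counts how many circular dishes of a given diameter would be needed to reach that geometric area. The diameters are illustrative assumptions (76 m is the Lovell Telescope); they do not describe the SKA's actual design. The sketch also ignores aperture efficiency, which makes a real dish's effective area smaller than its geometric area, so real designs would need even more collecting surface.

```python
import math


def dishes_for_area(total_area_m2, dish_diameter_m):
    """Number of circular dishes whose combined geometric area reaches the target."""
    area_per_dish = math.pi * (dish_diameter_m / 2.0) ** 2
    return math.ceil(total_area_m2 / area_per_dish)


ONE_KM2 = 1.0e6  # one square kilometre, in square metres

# Illustrative diameters only, not the SKA's actual antenna design.
for d in (12.0, 15.0, 25.0, 76.0):
    print(f"{d:4.0f} m dishes: {dishes_for_area(ONE_KM2, d):6d} needed")
```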

The image above is an impression of the central core of 15x12 m antennas that are envisaged to make up the vast collecting area. Notice that they appear to be located in a desert region. It is vital that they are well away from human habitation as the radio interference (such as from mobile phones) would make it impossible to observe the very faint hydrogen emission in a populated area. Two possible sites are in contention to host the SKA: the Northern Cape in South Africa or the deserts in Western Australia. Smaller, precursor, arrays are already being built in both locations. Site testing continues and it is hoped that a site decision will be made by the end of 2012.

It is expected that funding to build phase 1 of the project will be provided shortly afterwards, and phase 1 might be carrying out initial science by 2018. All being well, the full array should be completed by 2024 and will be the major radio observatory for this century. It is ranked equally with the E-ELT by the European astronomical funding agencies. Though it initially arose out of the investigation of the cosmological dark ages, it will be capable of a wide range of other investigations. A full account of the SKA and the science that it will undertake can be found in the “Square Kilometre Array” Wikipedia article written by the author. The SKA will also have a role in the last of the topics covered by this lecture.

The light from the formation of the first stars and galaxies brought the dark ages to an end, but that light still cannot be observed in the visible part of the spectrum: again because of the expansion of the universe since then, it has been redshifted into the infrared. Infrared observations are difficult to make from the ground, as our Earth radiates profusely in the infrared, so what is really required is an infrared space telescope, and one is on its way.
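Cosmological redshift stretches every wavelength by the same factor, (1 + z). Applying this to the visible band (taken here as roughly 400 to 700 nm, an approximate convention) for the galaxy at z = 8.55 discussed earlier shows that its starlight now arrives in the infrared, at several micrometres.

```python
def observed_wavelength_nm(emitted_nm, z):
    """Cosmological redshift stretches every wavelength by the factor (1 + z)."""
    return emitted_nm * (1.0 + z)


VISIBLE_NM = (400.0, 700.0)  # approximate limits of human vision (assumption)
z = 8.55                      # redshift of the galaxy discussed earlier in the lecture

blue, red = (observed_wavelength_nm(w, z) for w in VISIBLE_NM)
print(f"visible light emitted at z = {z} arrives at "
      f"{blue / 1000:.1f} to {red / 1000:.1f} micrometres")
```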

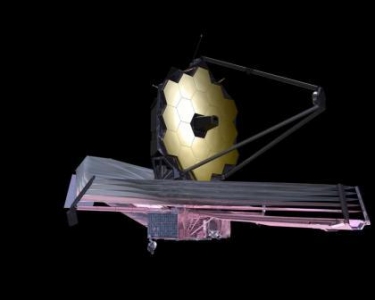

The James Webb Space Telescope (JWST) is NASA’s follow-up to the Hubble Space Telescope and is named after James Webb, a former NASA administrator. It is a large, infrared-optimized space telescope, scheduled for launch in 2014. Its large mirror, 6.5 meters (21.3 feet) in diameter, and its sunshield, the size of a tennis court, will need to be unfolded in space. When launched, the spacecraft will be sent to a location about 1.5 million km (1 million miles) from the Earth, directly away from the Sun. This location is called L2 and is an equilibrium point where a spacecraft orbits the Sun with the same period as the Earth (though not a stable one, so a spacecraft there needs occasional small course corrections to stay in place). [Normally, as one moves further out into the solar system, orbital periods get longer but, precisely beyond the Earth, the Earth’s gravitational pull adds to that of the Sun and, at just the right distance, the orbital period will also be one year.]
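The bracketed argument can be checked numerically. In a frame that rotates once per year, look for the point beyond the Earth where the combined pull of the Sun and Earth exactly supplies the centripetal force needed to go round in one year. This is the standard circular restricted three-body setup. The Sun–Earth mass ratio below is an assumed round value, and the Moon is ignored. Because the net force increases steadily with distance in the chosen interval, simple bisection finds the single balance point.

```python
# Assumed mass ratio mu = M_earth / (M_sun + M_earth); the Moon is ignored.
MU = 3.003e-6
AU_KM = 1.495978707e8


def net_acceleration(x, mu=MU):
    """Net outward acceleration on the Sun-Earth line, in the frame rotating once a year.

    Units: Sun-Earth separation = 1 and G (M_sun + M_earth) = 1, so the
    angular speed is 1. Sun at x = -mu, Earth at x = 1 - mu; valid beyond the Earth.
    """
    centrifugal = x
    sun = (1.0 - mu) / (x + mu) ** 2
    earth = mu / (x - (1.0 - mu)) ** 2
    return centrifugal - sun - earth


def l2_distance_km(mu=MU, tol=1e-12):
    """Bisect for the balance point, where a body keeps pace with the Earth's one-year orbit."""
    earth_x = 1.0 - mu
    lo, hi = earth_x + 1e-9, earth_x + 0.1  # net force is inward at lo, outward at hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if net_acceleration(mid, mu) < 0.0:
            lo = mid
        else:
            hi = mid
    return (0.5 * (lo + hi) - earth_x) * AU_KM


print(f"L2 lies about {l2_distance_km() / 1e6:.2f} million km beyond the Earth")
```

The result comes out close to the 1.5 million km quoted above.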

A major role of JWST is to reveal the story of the formation of the first stars and galaxies in the Universe. Theory predicts that the first stars were 30 to 300 times as massive as our Sun and millions of times as bright, burning for only a few million years before exploding as supernovae. The emergence of these first stars marked the end of the "Dark Ages" in cosmic history, and an understanding of these first sources is critical, since they greatly influenced the formation of later objects such as galaxies. When the first massive stars exploded as supernovae, their remnants would have been black holes. These initially small black holes started to swallow gas and other stars, and merged to become the huge black holes that are now found at the centres of massive galaxies. It is hoped that the JWST will shed light on the nature of the relationship between the black holes and the galaxies that host them.

Even now, astronomers do not really know how the galaxies formed, what gives them their shapes and what happens when small and large galaxies collide or join together. The JWST will hopefully answer these questions. By studying some of the earliest galaxies and comparing them to today’s galaxies we may be able to understand their growth and evolution. It will also allow scientists to gather data on the types of stars that existed in these very early galaxies. Follow-up observations using spectroscopy of hundreds or thousands of galaxies will help us understand how elements heavier than hydrogen were formed and built up as galaxy formation proceeded through the ages. These studies will also reveal details about merging galaxies and shed light on the process of galaxy formation itself.

Stars and their planetary systems form together in thick dust clouds, which makes it almost impossible to study their formation at visible wavelengths. Much of the obscuring gas and dust seen in visible images largely disappears when viewed in the infrared, so that the protostars lying behind it become easier to see. Infrared astronomy can thus penetrate dusty regions of space such as molecular clouds and observe the young stars forming within. As indicated above, infrared observations are also key to observing young stars in the early Universe, as the visible light they radiated will have been shifted to infrared wavelengths by the expansion of the Universe since the light was emitted.

The James Webb Space Telescope with its excellent imaging and spectroscopic capabilities will allow us to study the formation of stars and will also be able to image the proto-planetary disks around stars helping us to understand how planets may form in orbit around them. These studies will also be greatly helped by the high resolution imaging in the wavelength bands just beyond the infrared - the sub-millimetre and millimetre parts of the electromagnetic spectrum – that will be achieved using …….

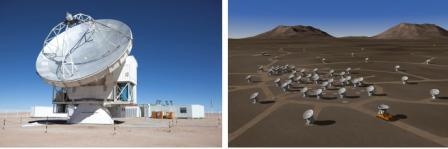

One of ALMA’s 12 m antennas and an artist’s impression of their layout in the Atacama Desert.

ALMA (the Atacama Large Millimeter/submillimeter Array) will provide unprecedented sensitivity and resolution to study gas and dust emission in the millimetre and sub-millimetre bands. It will be a single telescope of revolutionary design, composed initially of 66 high-precision antennas operating at wavelengths of 0.3 to 9.6 mm. It is being built in the Atacama desert in the Chilean High Andes at a height of 5100 m at the Llano de Chajnantor Observatory. This high location was chosen because water vapour emits and absorbs at sub-millimetre wavelengths, and at such an altitude there is far less water vapour above the telescope. The Atacama desert is one of the driest locations on Earth, but it can often suffer from wind speeds of up to 100 mph, hence the very solid antenna construction shown in the image above.

The antennas can be moved across the desert plateau giving separations of up to 16 km. This gives ALMA a powerful variable "zoom", similar in its concept to that employed at the VLA site in New Mexico, US - some observations being best made with a compact fully filled array whilst others benefit from the greater resolution given by a more spread out sparse array. The impressive total collecting area given by the large number of telescopes provides its high sensitivity.
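The "zoom" works because an interferometer's finest resolvable angle is roughly the wavelength divided by the separation (baseline) of its antennas, θ ≈ λ/B. The sketch uses ALMA's wavelength range (0.3 to 9.6 mm) and maximum separation (16 km) from the text. The 150 m compact spacing is an illustrative assumption, and λ/B is only an approximation that ignores the exact array layout and weighting.

```python
import math

RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0  # radians to seconds of arc


def resolution_arcsec(wavelength_m, baseline_m):
    """Approximate angular resolution of an interferometer, theta ~ lambda / B."""
    return wavelength_m / baseline_m * RAD_TO_ARCSEC


# Wavelength range and 16 km maximum separation from the text;
# the 150 m compact spacing is an illustrative assumption.
for baseline in (150.0, 16_000.0):
    best = resolution_arcsec(0.3e-3, baseline)
    worst = resolution_arcsec(9.6e-3, baseline)
    print(f"B = {baseline / 1000:5.2f} km: {best:.4f} to {worst:.3f} arcsec")
```

Spreading the antennas from a compact to an extended layout sharpens the resolution by the ratio of the baselines, roughly a hundredfold here.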

The telescopes are provided by the European, North American and East Asian partners of ALMA. The American and European partners have each placed orders for twenty-five 12-meter diameter antennas (of two different designs for political reasons) to make up the main array. East Asia is contributing 16 antennas (four 12-meter diameter and twelve 7-meter diameter antennas) to form the Atacama Compact Array (ACA). Because it uses smaller antennas than the main array, the ACA can image larger fields of view at one time and, because these antennas can be placed closer together, it can also image sources of larger angular extent. The ACA will also work together with the main array to enhance the latter's wide-field imaging capability.
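The advantage of smaller antennas for wide fields follows from the same diffraction argument applied to a single dish: its field of view (primary beam) is roughly λ/D, so a smaller diameter D sees a wider patch of sky. The 1 mm wavelength below was chosen for illustration, and λ/D is a rough approximation; the exact beam width also depends on how the dish is illuminated.

```python
import math

RAD_TO_ARCSEC = 180.0 / math.pi * 3600.0  # radians to seconds of arc


def field_of_view_arcsec(wavelength_m, dish_diameter_m):
    """Rough width of a single dish's field of view (primary beam), ~ lambda / D."""
    return wavelength_m / dish_diameter_m * RAD_TO_ARCSEC


wavelength = 1.0e-3  # 1 mm, chosen for illustration
for dish in (12.0, 7.0):
    fov = field_of_view_arcsec(wavelength, dish)
    print(f"{dish:4.0f} m dish at 1 mm: field of view ~ {fov:.0f} arcsec")

ratio = field_of_view_arcsec(wavelength, 7.0) / field_of_view_arcsec(wavelength, 12.0)
print(f"7 m dishes see a field {ratio:.2f} times wider ({ratio ** 2:.1f} times the area)")
```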

The first science observations are scheduled to begin in the second quarter of 2011.

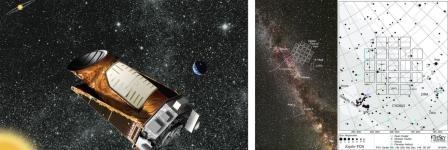

We are beginning to realise that life could exist in a wider variety of locations than once thought: for example, in an ocean beneath the ice crust of a satellite, kept liquid by tidal heating due to its proximity to a giant planet. [Europa, a satellite of Jupiter, is one such example.] However, the most likely location for advanced life would be on the surface of a planet, such as our Earth, within the habitable zone of its star (neither too hot nor too cold, so that water could exist as a liquid on the surface). One of the “holy grails” of astronomy would thus be the discovery of other Earth-like planets within their star’s habitable zone. As yet none have been discovered, but a spacecraft called KEPLER (after Johannes Kepler, who formulated the empirical laws of planetary motion) is now observing part of the Milky Way close to the constellations of Cygnus and Lyra and is expected to discover such planets in the next few years. The stars in this region of the sky are, like the Sun, slightly off the plane of the galaxy and at roughly the same distance from the galactic centre. This is significant because, rather like the habitable zone of a star, it has been suggested that there may be a habitable zone of the Galaxy which would, of course, include our Sun.

KEPLER is simultaneously observing over 140,000 stars, looking for the slight drop in brightness as a planet passes across the disk of its parent star (as, for example, in the transit of Venus across the face of the Sun in 2004). An Earth-sized planet crossing a Sun-like star is expected to reduce its brightness by only about 0.01%. The size of the drop enables one to deduce the diameter of the planet (given the diameter of the star), and the interval between transits gives the planet's orbital period. The period, together with the star's mass, gives the planet's distance from its star through Kepler's third law; models of the star's radiation then enable the planet's surface temperature to be estimated, and so whether liquid water could exist on the surface.
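The chain of reasoning in this paragraph can be sketched numerically for the Earth and Sun. The transit depth is the fraction of the star's disc covered by the planet, (Rp/R*)². Kepler's third law for a circular orbit turns the period into an orbital radius. A simple energy balance gives an "equilibrium" temperature. The solar and terrestrial constants are standard values, but the albedo of 0.3 and the neglect of any greenhouse effect are assumptions, which is why the result sits below the Earth's actual mean surface temperature.

```python
import math

# Standard physical, solar and terrestrial values (SI units unless noted).
G = 6.674e-11            # gravitational constant
M_SUN = 1.989e30         # kg
R_SUN_KM = 695_700.0
R_EARTH_KM = 6_371.0
T_SUN_K = 5_772.0        # effective surface temperature of the Sun
SECONDS_PER_DAY = 86_400.0
AU_M = 1.495978707e11


def transit_depth(planet_radius_km, star_radius_km=R_SUN_KM):
    """Fractional dimming when the planet's disc covers part of the star's disc."""
    return (planet_radius_km / star_radius_km) ** 2


def planet_radius_from_depth(depth, star_radius_km=R_SUN_KM):
    """Invert the transit depth to recover the planet's radius."""
    return star_radius_km * math.sqrt(depth)


def orbit_radius_m(period_days, star_mass_kg=M_SUN):
    """Kepler's third law for a circular orbit: a^3 = G M P^2 / (4 pi^2)."""
    period_s = period_days * SECONDS_PER_DAY
    return (G * star_mass_kg * period_s ** 2 / (4.0 * math.pi ** 2)) ** (1.0 / 3.0)


def equilibrium_temperature_k(orbit_m, star_temp_k=T_SUN_K,
                              star_radius_km=R_SUN_KM, albedo=0.3):
    """Temperature of a planet re-radiating all absorbed starlight (assumed albedo, no greenhouse)."""
    star_radius_m = star_radius_km * 1e3
    return star_temp_k * math.sqrt(star_radius_m / (2.0 * orbit_m)) * (1.0 - albedo) ** 0.25


depth = transit_depth(R_EARTH_KM)
a = orbit_radius_m(365.25)
print(f"Earth-Sun transit depth : {depth:.4%}")
print(f"recovered planet radius : {planet_radius_from_depth(depth):.0f} km")
print(f"orbit radius            : {a / AU_M:.3f} AU")
print(f"equilibrium temperature : {equilibrium_temperature_k(a):.0f} K")
```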

Artist’s impression of the KEPLER spacecraft and a diagram showing the area of sky where over 140,000 stars are being observed

KEPLER can only detect planets whose orbital plane lies very close to our line of sight, and the calculated probability of an Earth-like planet at 1 AU transiting a Sun-like star is 0.465%, or about 1 in 215. As the planets in a solar system tend to lie in one plane, there is a good chance that, if one planet were detected, others in the same solar system would be as well.
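The 0.465% figure follows from geometry. For a randomly oriented circular orbit, the chance that the planet passes in front of its star as seen from Earth is approximately R*/a, the star's radius divided by the orbital radius. The sketch below reproduces the quoted value using the Sun's radius and 1 AU; the small-angle approximation and circular orbit are the assumptions.

```python
R_SUN_KM = 695_700.0
AU_KM = 1.495978707e8


def transit_probability(star_radius_km, orbit_radius_km):
    """Chance that a randomly oriented circular orbit is aligned to transit, ~ R_star / a."""
    return star_radius_km / orbit_radius_km


p = transit_probability(R_SUN_KM, AU_KM)
print(f"transit probability = {p:.3%}, i.e. about 1 in {1 / p:.0f}")
```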

Kepler began science observations in May 2009, having spent time optimising the position and tilt of its ~1m primary mirror. It is now measuring the brightness of the target stars every 30 minutes to detect planetary transits. If 10% of the stars observed had an Earth-like planet, it would be expected to detect about 46. When its mission (expected to last more than 3.5 years) is complete, the results will show how often Earth-like planets orbit other stars.
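The expected number of detections is simply the number of suitable stars, times the fraction assumed to host an Earth-like planet, times the transit probability of about 1 in 215 quoted above. The lecture does not say how many stars enter this estimate. The sketch suggests that "about 46" corresponds to roughly 100,000 suitable stars, whereas all 140,000 observed stars would give a somewhat larger number. That is an inference made here, not a figure from the text.

```python
def expected_detections(n_stars, fraction_with_planet, transit_prob):
    """Expected number of transiting Earth-like planets found in a survey."""
    return n_stars * fraction_with_planet * transit_prob


TRANSIT_PROB = 695_700.0 / 1.495978707e8  # R_sun / 1 AU, about 1 in 215

# The star counts are assumptions for illustration; the 10% fraction is from the text.
for n in (100_000, 140_000):
    print(f"{n:,} stars: about {expected_detections(n, 0.10, TRANSIT_PROB):.0f} detections")
```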

The first five planet discoveries by Kepler were announced in January 2010: four were around 15 times the diameter of the Earth and thus larger than Jupiter (11 Earth diameters), but one was just 4 times the Earth’s diameter. We cannot expect any Earth-like planets to be announced in the near future, as at least three transits need to be observed to be sure that the dimming was caused by a planet. A planet orbiting a star like our Sun within its habitable zone (like our Earth) will have a similar orbital period, so at least 2 years of observations will be needed to observe three transits. We should thus not expect any such discovery announcements for a year or so, and typically three years of observations will be needed. However, we know that many stars have Jupiter-sized planets in close orbits around them, and these would have been detected within a few months of operation. To date, NASA has released data on 312 planetary candidates found by Kepler and is expected to release data on a further 400 in January 2011.
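The timing argument above can be made explicit. To see n transits of a planet with period P, the observations must span at least (n − 1)P if a transit happens to fall right at the start. In the worst case, where the first transit is only just missed, the span approaches nP. The 4-day period for a close-in giant planet is an illustrative assumption.

```python
def baseline_range_years(period_years, transits_needed=3):
    """Observing span needed to catch n transits: best case and worst case.

    Best case: a transit falls on the first day, so (n - 1) periods suffice.
    Worst case: the first transit is only just missed, so nearly n periods are needed.
    """
    best = (transits_needed - 1) * period_years
    worst = transits_needed * period_years
    return best, worst


# The 4-day period is an illustrative assumption for a close-in giant planet.
for label, period in (("close-in giant (4 days)", 4 / 365.25),
                      ("Earth analogue (1 year)", 1.0)):
    best, worst = baseline_range_years(period)
    print(f"{label}: {best:.2f} to {worst:.2f} years")
```

For an Earth analogue this gives two to three years, matching the estimate in the text, while close-in giants need only a few weeks.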

Once a target list of Earth-like planets has been produced, infrared telescopes such as the James Webb Space Telescope will be able to study their atmospheres. The Earth’s atmosphere has a complex infrared spectrum showing evidence of water vapour, carbon dioxide and, significantly, ozone. Ozone can only exist in an atmosphere if it contains free oxygen. As oxygen is highly reactive, free oxygen can only exist long term if it is being replenished by some mechanism, in our case photosynthesis. Thus the discovery of water vapour and ozone in the atmosphere of another planet would indicate that some form of life was present!

One way that we might detect the presence of advanced life is to detect a radio signal from an advanced civilisation. This could be unintentional if, for example, we detected a radar signal. More likely, it could be a contact signal deliberately beamed at us (perhaps because they had detected ozone in our atmosphere and suspected that life exists here). This study is called SETI, the Search for Extra-Terrestrial Intelligence; its first observations were made by Frank Drake at the Green Bank Observatory in West Virginia in 1960. However, even the most sensitive observations yet undertaken (in Project Phoenix, using the Lovell Telescope at Jodrell Bank in combination with the giant Arecibo Telescope in Puerto Rico) have only searched out to a distance of 200 light years, a tiny part of the Milky Way galaxy! When the Square Kilometre Array is (hopefully) completed in 2024, it would have the sensitivity to detect signals beamed at us from across the galaxy and thus might, one day, show us that we are not alone in the Universe.